What's the difference between compression, normalization and leveling? A walk-through of when to use which function, and where to find good applications for the task at hand.

Ever wondered why there are so many tools for treating volume and sound dynamics? This post aims to shine a light on why that is, and on what to use to get the job done properly.

Volume Differences & Treatment

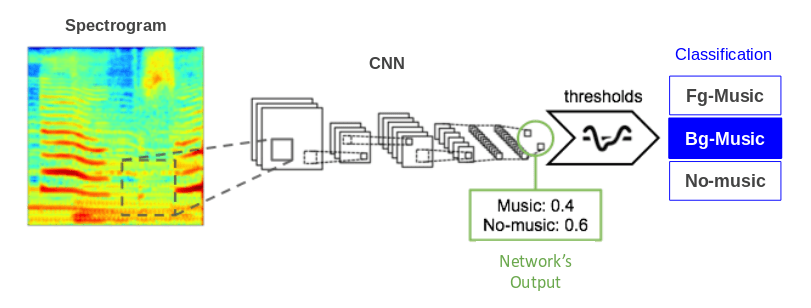

The core concept of audio dynamics treatment is to level out the differences in amplitude in the recording at hand. This is especially important today, as sound plays a big part in how we perceive information and, often, in how we judge the quality of a production, whether it's a video clip, a movie or series, or a song on the air. The three main methods for achieving a sound that is even and loud are audio compression/limiting, audio leveling and audio normalization.

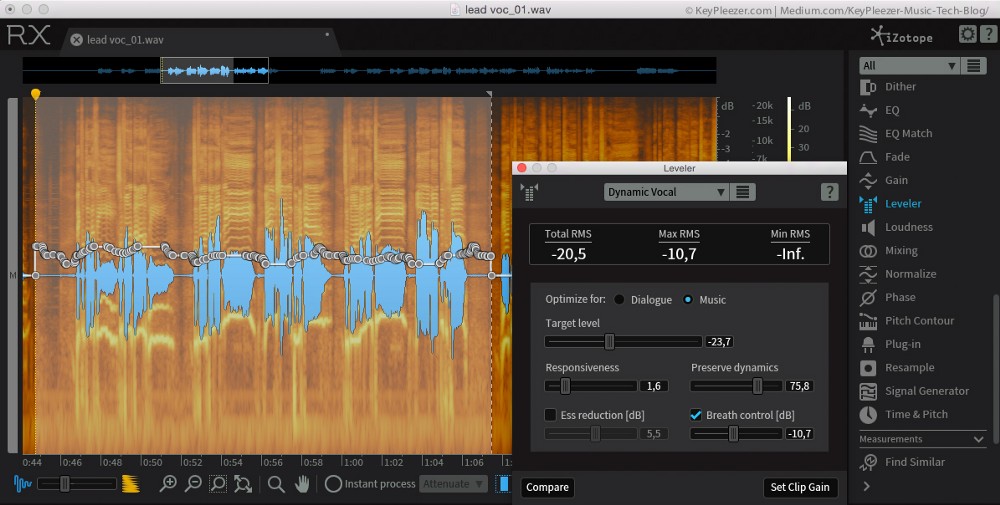

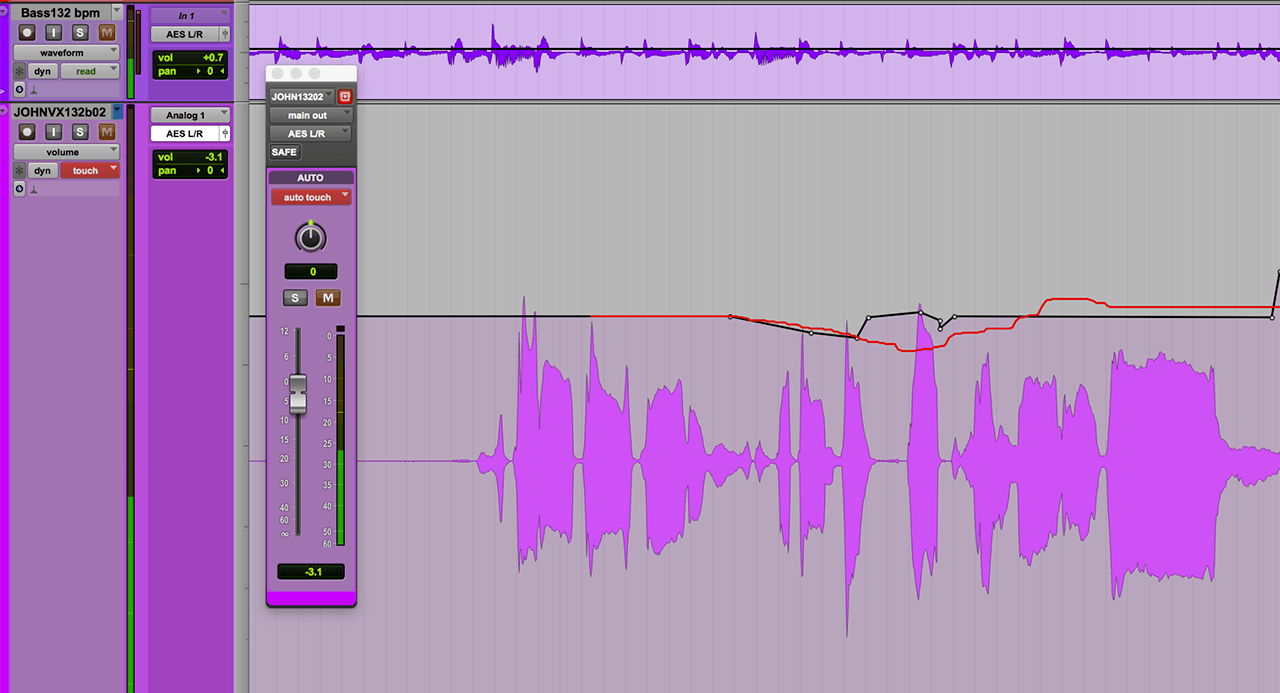

Audio compression (as in using an audio compressor, not lossy data compression such as MP3) is not to be confused with normalization or leveling. All three aim in the same direction, which is to even out the differences in volume and amplitude within an audio recording, but they go about it in different ways and handle different aspects of the process. Compression/limiting mostly handles the immediate dynamics and peaks. Leveling usually adjusts the longer sections inside an audio file so that they better match each other, producing a file that is more consistent internally, as a block or clip. Audio normalization raises or lowers the entire file or recording to a certain level, measured either from the peak level or from the RMS level of the file.
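To make the two measurements concrete, here is a minimal sketch of how the peak level and the RMS level of a clip could be computed. It assumes the audio is already available as floating-point samples between -1.0 and 1.0, and the function names `peak_dbfs` and `rms_dbfs` are made up for this illustration. Real meters differ in their RMS reference convention and window length.

```python
import math

def peak_dbfs(samples):
    """Peak level in dBFS for float samples in the range -1.0..1.0."""
    peak = max(abs(s) for s in samples)
    return 20 * math.log10(peak) if peak > 0 else float("-inf")

def rms_dbfs(samples):
    """RMS level in dB relative to full scale (assumed convention:
    a full-scale square wave reads 0 dB)."""
    mean_square = sum(s * s for s in samples) / len(samples)
    return 10 * math.log10(mean_square) if mean_square > 0 else float("-inf")

# Test signal: a 440 Hz sine at half of full scale, one second at 48 kHz.
sine = [0.5 * math.sin(2 * math.pi * 440 * n / 48000) for n in range(48000)]

# The peak reads roughly -6.0 dBFS and the RMS roughly -9.0 dB.
print(round(peak_dbfs(sine), 1), round(rms_dbfs(sine), 1))
```

The gap of about 3 dB between the two readings is typical for a pure sine. Speech and music usually show a much larger gap, which is why peak-based and RMS-based normalization can give quite different results on the same file.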

Audio compression & compressors / limiters

Audio compressors react to the peaks or to the short-term RMS level (roughly, the perceived volume of the loud moments) to stop the sound from getting too loud. A compressor does this with finer control, offering parameters such as attack, release and knee, whereas a limiter simply stops all sound at a given level, usually at or just below 0 dBFS. This keeps a voice recording, a guitar or a whole mix from reaching a level where digital clipping occurs, which sounds horrible and flattens the peaks, leaving little detail.
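The relationship between a compressor and a limiter can be sketched with the static gain curve alone. The function below is a simplified illustration, not how any particular plug-in works: the name `compress_db` and the default threshold and ratio are assumptions, the knee is hard, and attack and release (which govern how fast the gain changes over time) are left out entirely.

```python
def compress_db(level_db, threshold_db=-18.0, ratio=4.0):
    """Static compressor curve with a hard knee.

    Below the threshold the level passes unchanged. Above it, every
    `ratio` dB of input produces only 1 dB of output increase.
    """
    if level_db <= threshold_db:
        return level_db
    return threshold_db + (level_db - threshold_db) / ratio

# A peak 12 dB above the threshold comes out only 3 dB above it.
print(compress_db(-6.0))   # -15.0

# Quiet material below the threshold is left alone.
print(compress_db(-30.0))  # -30.0

# A limiter behaves like the same curve with an infinite ratio:
# nothing gets past the threshold, here set to 0 dBFS.
print(compress_db(3.0, threshold_db=0.0, ratio=float("inf")))  # 0.0
```

In a real compressor this curve is applied to a smoothed level detector rather than to individual values, which is where the attack and release settings come in.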

Learn more about audio compression and limiting for music, podcasting and video here!

Audio Peak and Loudness Normalization

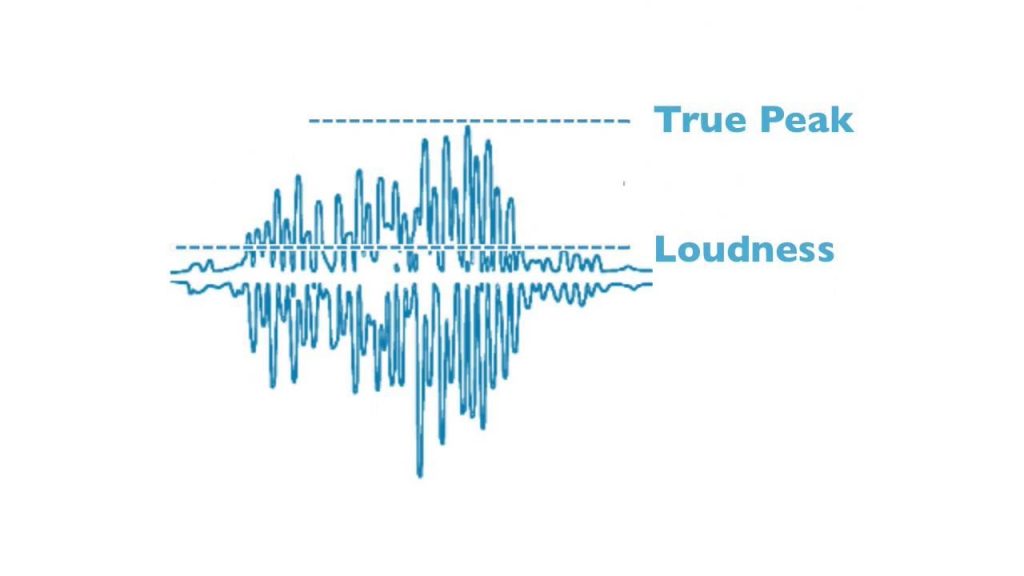

In contrast to compression, normalization as a general rule does not do any dynamics processing. It only raises or lowers the volume of the entire sound clip or recording, by one fixed amount, to a specified level. The default target in most music mixing, recording or mastering applications is 0 dBFS.
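As a rough sketch, peak normalization comes down to finding the loudest sample and scaling everything by one constant factor. The function name `peak_normalize` and the sample values are invented for this example, and the samples are assumed to be floats between -1.0 and 1.0.

```python
def peak_normalize(samples, target_dbfs=0.0):
    """Scale all samples by one constant so the peak lands on target_dbfs."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return list(samples)  # pure silence: nothing to scale
    target_linear = 10 ** (target_dbfs / 20)  # dBFS to linear amplitude
    gain = target_linear / peak
    return [s * gain for s in samples]

quiet_clip = [0.1, -0.25, 0.2]
normalized = peak_normalize(quiet_clip, target_dbfs=-1.0)

# The loudest sample now sits at -1 dBFS (about 0.891 linear), and the
# proportions between the samples are the same as before.
print(max(abs(s) for s in normalized))
```

Because every sample is multiplied by the same factor, the dynamics of the clip are untouched. A single stray peak therefore decides how loud the whole file ends up, which is one reason peak normalization says little about perceived loudness.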

Nowadays, with broadcast media and online streaming, the need to normalize volume based on perceived loudness, instead of the peak or even RMS levels of recordings or mixes, has given rise to new loudness normalization standards and recommendations. There are numerous loudness standards for broadcast media, and which one to adhere to depends on the geographical market in question and the material at hand. Normalization can therefore be split into loudness normalization and peak normalization, where the former is most often used for broadcasting and video, and the latter for music production.
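Once a loudness meter has produced a reading, the normalization step itself is simple arithmetic. The sketch below assumes the loudness and peak values have already been measured by such a meter; the function name `loudness_gain_db`, the -1 dB ceiling default and the example readings are assumptions for illustration. The -23 LUFS target used in the examples is the one EBU R 128 specifies for broadcast, and other standards and platforms use other values.

```python
def loudness_gain_db(measured_lufs, target_lufs, peak_db, ceiling_db=-1.0):
    """Gain in dB that moves a programme toward the target loudness.

    The gain is capped so that the measured peak, after the gain is
    applied, does not exceed the ceiling.
    """
    wanted_gain = target_lufs - measured_lufs
    headroom = ceiling_db - peak_db
    return min(wanted_gain, headroom)

# A loud mix at -16 LUFS is turned down by 7 dB to reach -23 LUFS.
print(loudness_gain_db(-16.0, -23.0, peak_db=-0.5))  # -7.0

# A quiet recording at -30 LUFS needs +7 dB, but its peak at -3 dB
# leaves only 2 dB of headroom under the -1 dB ceiling.
print(loudness_gain_db(-30.0, -23.0, peak_db=-3.0))  # 2.0
```

In the second case the target cannot be reached with plain gain alone. Getting the rest of the way would take a limiter or compressor, which is where normalization and dynamics processing meet.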

Another important use of loudness normalization is in music mastering, where it's used heavily to even out the perceived volume between different masters, so that volume does not cloud the listener's judgment. Louder versions tend to sound better to most people, even when nothing else has changed.
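One possible way to set up such a comparison is to turn every master down to the level of the quietest one, so that nothing has to be boosted and nothing can clip. The sketch below assumes each master's loudness has already been measured; the function name `match_for_comparison`, the master names and the readings are hypothetical.

```python
def match_for_comparison(loudness_by_master):
    """Return a gain in dB per master that brings all of them down
    to the loudness of the quietest one."""
    reference = min(loudness_by_master.values())
    return {name: reference - level
            for name, level in loudness_by_master.items()}

# Hypothetical loudness readings (in LUFS) for three masters of one song.
readings = {"master_a": -9.0, "master_b": -12.5, "master_c": -14.0}

print(match_for_comparison(readings))
# {'master_a': -5.0, 'master_b': -1.5, 'master_c': 0.0}
```

With these offsets applied during playback, any remaining preference between the masters comes from tone and dynamics rather than from sheer level.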

Another use is to treat a large number of MP3 files, evening out the differences in volume throughout a library. It's a common function in format conversion tools that convert WAV/AIFF/CAF files into compressed formats for web distribution or streaming, such as MP3, M4A/AAC and OGG.